Prefix Search in Node.js using Knex.js and PostgreSQL

How to create a prefix search in a Node.js service using Knex.js and a Postgres database

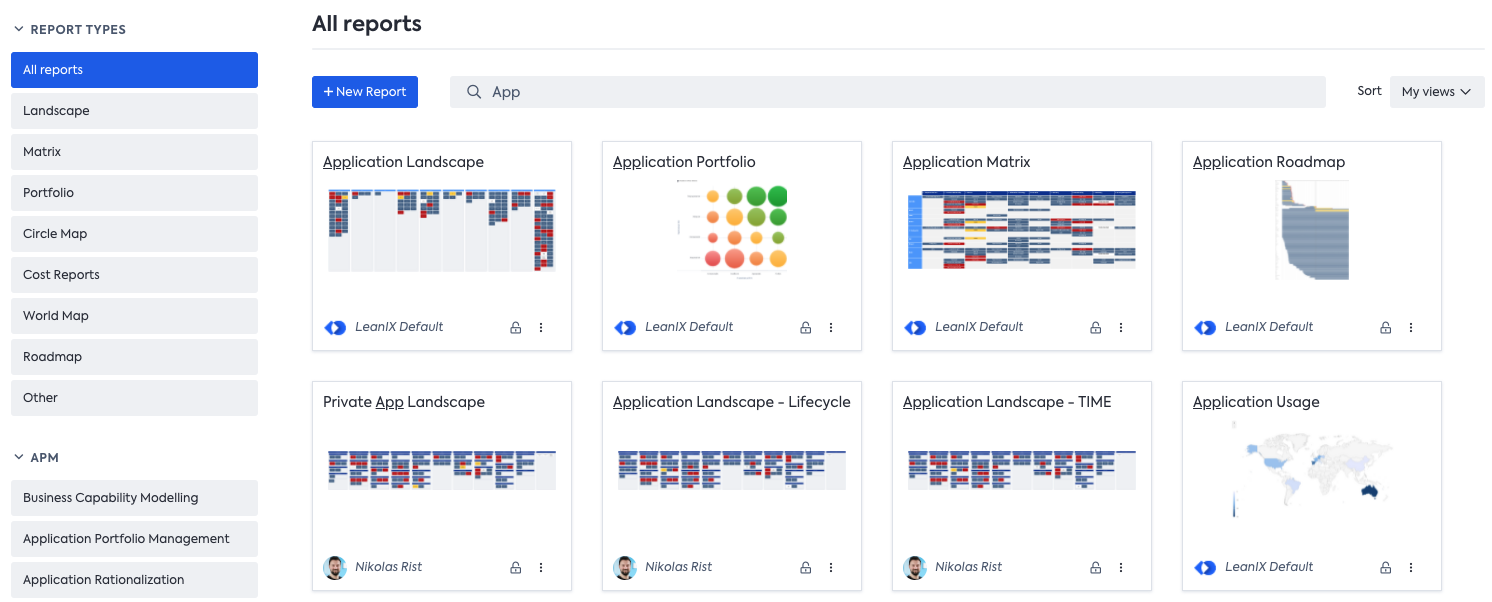

We want to enable LeanIX users to easily discover various entities such as diagrams, saved searches, dashboards, and reports with a consistent user experience. One important feature is the ability to search for the names of such entities. This search works with a prefix search logic and only applies to the name of an entity.

In our engineering team, we are iteratively moving responsibilities out of our largest and oldest backend service into smaller scoped microservices. Therefore, we introduced a new navigation domain, and we built a new service. As we cannot replace the old functionality at once, the new service creates its secondary storage by receiving all necessary events of the different navigational entities via our Azure Event Hub and creates its own Navigation Items. To learn more about how our event hub helps us build scalable backend services, I recommend watching the talk Distributed Data: On Event Carried State Transfer by my colleague Per Bernhardt on the Bonn Code YouTube channel.

As of today, this new service serves two of three main navigation areas, the reports, and diagrams navigation. In this article we will dive into what prefix search is about and how we implemented it within our new domain.

Prefix search explained

Prefix search means, that the search term is only a prefix of a word within the name. It does not matter where the word is placed within the name, as long as whitespace is in between. The search term can have multiple words as long as they match a whole part of the name, and the last phrase can be a prefix of the last word matching.

Sounds complicated, let's walk through an example.

Imagine we have three Navigation Items with the following names:

- Item 1: Foo Bar

- Item 2: FooBar

- Item 3: Bar Foo

The following table shows some search results with different search terms, when using a prefix search. The underlines highlight the matching prefix within the name.

| Search term | Found items |

|---|---|

| Foo | Foo Bar, FooBar, Bar Foo |

| Foo Ba | Foo Bar |

| Ba | Foo Bar, Bar Foo |

| FooBa | FooBar |

| Bar F | Bar Foo |

White spaces are counted in, so it is not possible to find items 1 and 2 with the term Foo B or vice versa with FooB. This allows you to match multiple words from the beginning of the name, as long as proper white spaces are part of the search term.

Postgres full-text search

The blog post title already reveals, we implemented the Navigation service as a Node.js service with a Postgres database. In addition, we use Knex.js, which provides an abstraction of a query builder, but not a full ORM (Object Relational Mapping). In general, a good starting point to learn about the full-text search capabilities in Postgres is the documentation.

Prefix search - How?

You might ask yourself, why do they use Postgres? The simple answer is, we try to stay as close as possible to the technical stack that our team is familiar with (Node.js and PSQL) while cutting the Navigation out of the old service.

Therefore, we started with a research phase upfront, as it was not clear if we can build this specific kind of search within Postgres. The old service uses an ElasticSearch to run the search queries.

Postgres provides a text search functionality out of the box, which looked promising in the beginning, so we dug into it. The Postgres text search runs on documents, these represent a text to search in. This document is prepared to search on or can be created on the fly. Every document is transformed in a vector, so-called tsvector.

Throughout the research, we created a wrapper function for the out of the box to_tsvector() function from Postgres, with basic parameters. This allows us to change the behavior of the function in one place and use it later in multiple code segments without need to touch the code. In theory, you can use different languages to create the tsvector from the document, but we decided to use the default dictionary.

For now, we do not see the need of detecting languages on the fly to improve the search results. The benefit of using a specific language is that Postgres can remove specific stop words etc. better from the document. As we are only searching on names, this is not necessary at this moment. Maybe it becomes relevant in the future if we want to allow searching on the description of a Navigation Item. The below SQL code example is from our migration step to create the function when the service is deployed.

CREATE OR REPLACE FUNCTION lx_tsvector(text text) RETURNS tsvector AS

$BODY$

BEGIN

RETURN (select to_tsvector('simple', text));

END;

$BODY$

IMMUTABLE

language plpgsql;The function is written in PL/pgSQL, a loadable procedure language for Postgres. We omit further details in this blog post.

Let's take a look how such a tsvector looks like with the small example document Hello World!.

select lx_tsvector('Hello World!');

lx_tsvector

---------------------

'hello':1 'world':2As you can see, the vector has the length of 2 and both words are transformed to lowercase and normalized, called lexemes, and all so-called stop words are removed. Stop words are words/characters in a text which often occur without important information added to the text semantically. For example, and, as well as special punctuation characters or other special characters. In the second example, the !, + and the _ are removed and not present in the tsvector:

select lx_tsvector('Hello World!+_');

lx_tsvector

---------------------

'hello':1 'world':2For text search within Postgres you need the text search operator @@, the tsvector you are searching on, and a respective tsquery, which is a string with additional syntax, how the lexemes can occur within the tsvector. In our use case, we want to allow searching for multiple words separated by white spaces. Therefore, we use a built-in phraseto_tsquery() function, which creates automatically a proper tsquery out of phrases. The default to_tsquery() function accepts a single lexeme input only. We will explain the exact creation of our query along with our code example later.

select phraseto_tsquery('Hello World!');

phraseto_tsquery

---------------------

'hello' <-> 'world'Query example

Now we have our custom lx_tsvector() function in place, to create a proper ts_vector out of the name column of our Navigation Item. We still do not have the full tsquery we need, to have proper prefix search implemented. Next to the phraseto_tsquery() function, we also use some specific tsquery syntax to achieve the final prefix search behavior as described above. The next code snippet shows our necessary PSQL statement for the where clause, which defines if a row (Navigation Item) is found by the search term or not.

lx_tsvector(name) @@ (phraseto_tsquery('simple', ?)::text || ':*')::tsqueryYes, this one line of code is all we need to have proper prefix search implemented. When we started this journey we expected a complex query composed out of different functions etc. but that's it. One single line of code does all the magic. Now, let's split this up into its parts to get a proper understanding of what is happening, along with an example.

The left part of the search operator, @@, lx_tsvector(name) creates the ts_vector out of each column we need to query on. Imagine we have a Navigation Item with the name Hello World Report!. Then the respective ts_vector would look like this: 'hello':1 'world':2 'report':3.

The right part of the operator creates our tsquery in multiple steps. Let's cut this down as well.

The first part phraseto_tsquery('simple', ?)::text creates first a tsquery out of a phrase and converts it to a string, which I will explain in the next step. The question mark is used to put in the search term as a parameter, this provides us some security and prevents SQL injection. Imagine our search term is Hello World, then the respective query string will look like this: 'hello' <-> 'world'. You can read the query string like: “In the vector, I am looking for, hello is followed directly by world and nothing else must stay in between.”

The second thing that happens is defined by the two pipes || which is just a string concatenation. This is the reason, why we need to cast the previously created tsquery into a text with ::text otherwise this would fail.

This concatenated string is cast to a tsquery which looks finally like this: 'hello' <-> 'world':*. The magic part here, to make it a prefix search, is the :* which means in the text search syntax, that it does not matter which characters come after this part. Just a “simple” wild card, like in other languages.

So, in total our query now can be read like this: “A vector matches if one element is hello followed by the next element world followed by any other characters within the same element e.g., worldTwo, and any other following elements.”

Node.js 🤝 Postgres - How to connect the service?

Now we have all necessary queries on the database level, but how can we run these queries out of our Node.js service? We do this with the aforementioned Knex.js query builder library. The main benefit of using Knex.js for our implementation is that you have a lot of flexibility in building the query. You can mix high-level abstraction functions for select or join with raw SQL statements, which might not be supported in a full ORM at all.

Let's dive into this in more detail now.

Code example

The code example below shows a simplified query reduced to the search part only. In production, we have a much more complex query to support authorization, paging, filtering, sorting, and other functionality, which would make the code too complex to understand the basics in this context. How to use such Knex.js query builder, especially with typescript, is as well described in the blog post by Fabian Böller and will be omitted here.

let queryBuilder = pg<NavigationItemSearch>('navigation_items')

.select(

'navigation_items.id',

'navigation_items.description',

'navigation_items.group_key',

'navigation_items.name',

'navigation_items.owner',

'navigation_items.predefined',

'navigation_items.type'

)

.whereRaw(

"lx_tsvector(name) @@ \

(phraseto_tsquery('simple', ?)::text || ':*')::tsquery",

[searchTerm]

);

This small example already shows the benefit described above, that we can mix up abstraction functions with raw SQL.

The queryBuilder is later used in multiple sub-functions which attach different query parts depending on the request made by the frontend.

One example function is the following, adding a filter to find only Navigation Items the user is the owner of:

function attachOwnedByMeFilter(

queryBuilder: Knex.QueryBuilder,

userId: string,

ownedByMe?: boolean

): Knex.QueryBuilder {

return ownedByMe === true

? queryBuilder.andWhere('navigation_items.owner', userId)

: queryBuilder;

}Here the where-clause is only attached if a specific query parameter is set in the request, otherwise, the previous queryBuilder is returned. This allows us nice and readable chaining for different use cases.

Let's add this to our previous example and add the function signature which ends up in this full example function:

async search(

pg: Knex | Knex.Transaction,

workspaceId: string,

userId: string,

searchOptions: SearchOptions

): Promise<NavigationItemSearch[]> {

let queryBuilder = pg<NavigationItemSearch>('navigation_items')

.select(

'navigation_items.id',

'navigation_items.description',

'navigation_items.group_key',

'navigation_items.name',

'navigation_items.owner',

'navigation_items.predefined',

'navigation_items.type'

)

.whereRaw(

"lx_tsvector(name) @@ \

(phraseto_tsquery('simple', ?)::text || ':*')::tsquery",

[searchTerm]

);

queryBuilder = attachGroupKeyConstraints(

queryBuilder, searchOptions.groupKeys

);

queryBuilder = attachSharedWithMeFilter(

queryBuilder, pg, userId,

workspaceId, searchOptions.sharedWithMe

);

queryBuilder = attachOwnedByMeFilter(

queryBuilder, userId, searchOptions.ownedByMe

);

queryBuilder = attachCollectionConstraints(

queryBuilder, searchOptions.collectionId

);

queryBuilder = attachSearchTermTsQuery(

queryBuilder, searchOptions.searchTerm

);

queryBuilder = attachSorting(

queryBuilder, pg, userId, workspaceId, searchOptions.sort

);

return queryBuilder;

}This example above just gives an idea of how we attach different aspects of the query depending on different query parameters from the original request. It is not necessary to fully understand what the different functions are about in detail in this case.

Performance

This small analysis should give an idea of the performance of the query on our largest table. Therefore, we use different queries and inspect them via the analysis functionality within Postgres. The table we are talking about contains 392.240 Navigation Items while writing the post. Normally, the search works on Workspace scope, which will be analyzed as well.

Each customer can have multiple Workspaces to work in, and they are completely separated from each other. Therefore, we need the scope, to only return Workspace related Navigation Items to the user. In other words, Workspaces are a logical separation of the customers' data.

Our search term will be Application. This is a really common word within names of our Navigation Items and should return numerous results.

The table we are searching on is the navigation_items table. The following code snippet shows the necessary columns and indexes of the table, how it is stored in our databases.

The gin index uses the custom lx_tsvector() function on the name column.

Table "public.navigation_items"

Column | Type

--------------------------+------------

id | uuid

workspace_id | uuid

name | text

Indexes:

"navigation_items_pkey" PRIMARY KEY, btree (id, workspace_id)

"navigation_items_lx_tsvector_idx" gin (lx_tsvector(name))Let's define our query we want to analyze:

EXPLAIN ANALYZE SELECT id, name FROM NAVIGATION_ITEMS

WHERE lx_tsvector(name) @@

(phraseto_tsquery('simple', 'Application')::text || ':*')::tsquery;The EXPLAIN and ANALYZE key-words are from PSQL itself. EXPLAIN provides you the execution plan and in addition with ANALYZE it executes the query and provides actual run times. Find more details and explanation about EXPLAIN and ANALYZE in the documentation.

QUERY PLAN

-------------------------------------------------------------------------

Bitmap Heap Scan on navigation_items

(cost=683.43..40518.20 rows=76262 width=41)

(actual time=46.643..190.579 rows=81414 loops=1)

Recheck Cond: (lx_tsvector(name) @@ '''application'':*'::tsquery)

Heap Blocks: exact=13808

-> Bitmap Index Scan on navigation_items_lx_tsvector_idx

(cost=0.00..664.36 rows=76262 width=0)

(actual time=44.868..44.868 rows=82536 loops=1)

Index Cond: (lx_tsvector(name) @@ '''application'':*'::tsquery)

Planning Time: 0.349 ms

Execution Time: 195.522 msThe execution plan shows us, that we are using navigation_items_lx_tsvector_idx index during a Bitmap Index Scan within a Bitmap Heap Scan on the full table. The total execution time for the query is 195.522ms.

Let's add another WHERE clause to narrow down the scope to a specific workspace and see how the performance changes.

EXPLAIN ANALYZE SELECT id, name, workspace_id FROM NAVIGATION_ITEMS

WHERE lx_tsvector(name) @@

(phraseto_tsquery('simple', 'Application')::text || ':*')::tsquery

AND workspace_id='891b3034-41a7-4adf-9d86-86deaad7fe3d';Adding the Workspace scope provides a huge performance improvement, as you can see in the new query plan.

QUERY PLAN

-----------------------------------------------------------------------------

Index Scan using navigation_items_pkey on navigation_items

(cost=0.42..12680.52 rows=12 width=41)

(actual time=2.244..14.895 rows=20 loops=1)

Index Cond: (workspace_id = '891b3034-41a7-4adf-9d86-86deaad7fe3d'::uuid)

Filter: (lx_tsvector(name) @@ '''application'':*'::tsquery)

Rows Removed by Filter: 55

Planning Time: 0.214 ms

Execution Time: 14.910 msThe execution time decreased from 195.522ms to 14.910ms and you can see that the database changes the approach as well. It is not necessary anymore to use the gin index for the search, in addition, we can do a simple Index Scan instead of the previous Bitmap Heap Scan. For finding the matching items, it just uses a simple filter instead of a Bitmap Index Scan.

Let's take the next step and introduce an index on workspace_id to check if this can even improve the performance of the search. You can create such an index with CREATE INDEX ON navigation_items (workspace_id);, this creates an index with the default name navigation_items_workspace_id_idx to use a custom name you can write CREATE INDEX my_custom_index ON navigation_items (workspace_id); instead. In most cases, the default naming pattern of Postgres is sufficient and less error-prone.

Now, with the index in place, let's analyze the query again, and we get the following query plan.

QUERY PLAN

--------------------------------------------------------------------------------

Index Scan using navigation_items_workspace_id_idx on navigation_items

(cost=0.42..86.37 rows=12 width=57) (actual time=0.307..0.884 rows=20 loops=1)

Index Cond: (workspace_id = '891b3034-41a7-4adf-9d86-86deaad7fe3d'::uuid)

Filter: (lx_tsvector(name) @@ '''application'':*'::tsquery)

Rows Removed by Filter: 55

Planning Time: 0.636 ms

Execution Time: 0.945 msThe index on the workspace_id column provides us another significant performance boost from ~14ms to ~<1ms. With this execution time, we have a fast and simple prefix search query in place to serve a nice search experience for the user.

Conclusion

In this blog post, we explored how you can implement a prefix search with a PostgreSQL database and a Node.js service. The most complex part was to figure out how to achieve the prefix search as tsquery and which tsvector you should use. It showed up to be a single line of query, which was not expected in the beginning and already the default tsvector is enough. We could improve the performance by adding a scope and narrow down the number of items to search in. This makes the new setup powerful for us, as it serves all needs to the user, and we do not have to extend our technical stack within the team.

One key fact you should take away is:

Try first to solve your problem with the tools at hand and if that doesn't work, start researching new technologies that solve your problem. Do not just use new technology because someone tells you.

Nevertheless, stay open-minded for new things and always reflect your current technical stack, if it is still valid to stay in use or needs to be adapted.

Published by Nikolas Rist

Visit author page

💬 Discuss this article on Twitter