Leveraging predefined prompts in MCP

In this final blog post of the MCP series, we will take a look at prompts. Prompts are used to help users run the right tools.

Prompts are a key part of MCP. They provide guided instructions for LLMs — structure, context, and direction — to help models use available tools effectively. They bridge user intent and tool execution and let users leverage predefined templates that:

- provide clear instructions for specific use cases

- increase the likelihood of selecting the right tools

- offer standardized ways to interact with complex systems

- reduce the cognitive load on users when working with multiple tools

Well‑crafted prompts don’t guarantee the perfect tool choice, but they significantly improve precision and effectiveness.

Prompts are user‑controlled: servers expose them to clients so users can explicitly select them. Typically, users trigger prompts via UI commands, which makes discovery and invocation natural. For a deeper dive into this topic, see MCP Prompts Explained.

MCP Prompt Specification

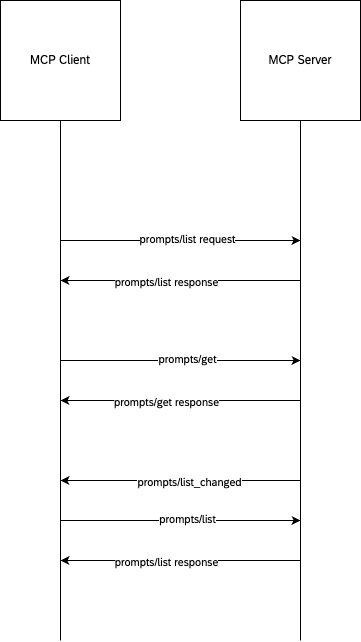

To understand how to implement prompts effectively, let's examine the official MCP specification. An MCP server can support three prompt-related messages:

- Prompt listing: Used to discover available prompts by calling the prompts/list request. This operation supports pagination for handling large numbers of prompts.

- Prompt retrieval: Allows the user to retrieve a specific prompt via the prompts/get request.

- Prompt changes: Notifies the client about changes in the prompt listing by sending a notification to the client. This operation is only available when the prompts capability listChanged is enabled.
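As a rough illustration of these three messages, the sketch below builds the JSON-RPC 2.0 payloads that would travel between client and server. The method names follow the MCP specification; the helper function names, the request ids, and the example argument values are made up for this post.

```python
import json
from typing import Any, Optional


def list_prompts_request(request_id: int, cursor: Optional[str] = None) -> dict[str, Any]:
    """Build a prompts/list request; pass the cursor of the previous page to paginate."""
    request: dict[str, Any] = {"jsonrpc": "2.0", "id": request_id, "method": "prompts/list"}
    if cursor is not None:
        request["params"] = {"cursor": cursor}
    return request


def get_prompt_request(request_id: int, name: str, arguments: dict[str, str]) -> dict[str, Any]:
    """Build a prompts/get request for one prompt and its arguments."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "prompts/get",
        "params": {"name": name, "arguments": arguments},
    }


def list_changed_notification() -> dict[str, Any]:
    """Build the notification a server sends when its prompt list changes.

    Notifications carry no id because no response is expected.
    """
    return {"jsonrpc": "2.0", "method": "notifications/prompts/list_changed"}


if __name__ == "__main__":
    print(json.dumps(list_prompts_request(1), indent=2))
    print(json.dumps(get_prompt_request(2, "suggestActivity", {"type": "outdoor"}), indent=2))
    print(json.dumps(list_changed_notification(), indent=2))
```

In practice a client library sends these messages for you, so you rarely write them by hand. Seeing the raw shape mostly helps when debugging traffic between a client and a server.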

Creating Prompts with FastMCP

Now that we understand the specification, let's implement some practical examples. If you've been following this MCP series, you already know how FastMCP provides annotations for registering tools and resources. The same convenient approach applies to prompts — FastMCP handles all the underlying complexity for us.

To get started, we need to update our main.py file to include prompt registration:

```python
from fastmcp import FastMCP
from resources import register_resources
from tools import register_tools
from prompts import register_prompts

# STDIO by default
mcp = FastMCP(name="Demo 🚀")

# Register all prompts
register_prompts(mcp)

# Register all resources
register_resources(mcp)

# Register all tools
register_tools(mcp)

if __name__ == "__main__":
    mcp.run()
```

For this demonstration, we'll create two different types of prompts to showcase the versatility of the system:

- suggestActivity - A simple prompt for activity suggestions

- generateCode - A more complex prompt for code generation

Let's examine each one in detail.

suggestActivity

This prompt demonstrates a straightforward use case — generating activity suggestions based on a user-specified type.

```python
from fastmcp import FastMCP
# PromptMessage and TextContent come from the MCP SDK that FastMCP builds on
from mcp.types import PromptMessage, TextContent


def register_prompts(mcp: FastMCP):
    """Register all prompts with the MCP instance"""

    @mcp.prompt(
        name="suggestActivity",
        description="Returns a prompt to suggest activity",
        tags={"activity", "fun"},
        meta={"version": "1.1", "author": "papa smurf"}
    )
    def suggestActivity(type: str) -> str:
        """Generates a user message asking for a certain activity."""
        return f"Can you please suggest a '{type}' activity?"
```

generateCode

This prompt showcases a more sophisticated example that returns a structured PromptMessage object rather than a simple string. It's designed to request code generation based on specific programming language and task requirements.

```python
from mcp.types import PromptMessage, TextContent

# Defined inside register_prompts(), next to suggestActivity
@mcp.prompt(
    name="generateCode",
    description="Returns a code snippet",
    tags={"code"},
    meta={"version": "1.0", "author": "papa smurf"}
)
def generateCode(language: str, task_description: str) -> PromptMessage:
    """Generates a user message requesting code generation."""
    content = f"Write a {language} function that performs the following task: {task_description}"
    return PromptMessage(role="user", content=TextContent(type="text", text=content))
```
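Whichever return style a prompt uses, the client ends up with a list of messages in the prompts/get result. The sketch below approximates that result in plain Python, without FastMCP, for both prompts. The helper names are hypothetical, and it assumes that a returned string is wrapped as a single user message, which is how the FastMCP documentation describes the conversion.

```python
import json
from typing import Any


def as_user_message(text: str) -> dict[str, Any]:
    """Wrap plain text in the message shape used in MCP prompt results."""
    return {"role": "user", "content": {"type": "text", "text": text}}


def suggest_activity_text(type: str) -> str:
    """Same template as the suggestActivity prompt."""
    return f"Can you please suggest a '{type}' activity?"


def generate_code_text(language: str, task_description: str) -> str:
    """Same template as the generateCode prompt."""
    return f"Write a {language} function that performs the following task: {task_description}"


def prompt_result(description: str, text: str) -> dict[str, Any]:
    """Approximate a prompts/get result that holds a single user message."""
    return {"description": description, "messages": [as_user_message(text)]}


if __name__ == "__main__":
    activity = prompt_result(
        "Returns a prompt to suggest activity", suggest_activity_text("outdoor")
    )
    code = prompt_result(
        "Returns a code snippet", generate_code_text("Python", "reverse a string")
    )
    print(json.dumps(activity, indent=2))
    print(json.dumps(code, indent=2))
```

Returning a PromptMessage directly is mainly useful when you need control over the role or the content type. For a single user message, a plain string is usually enough.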

Understanding the Generated Schema

As we've discussed in previous posts in this MCP series, FastMCP automatically generates JSON schemas for all registered components, including prompts. This schema generation provides clients with the necessary metadata to understand how to interact with each prompt.
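To get a feeling for where the argument metadata could come from, here is a minimal sketch that derives a name, description, and required flag for each parameter from a function signature. It is not FastMCP's actual implementation; `describe_arguments` is a hypothetical helper, and treating every parameter without a default value as required is an assumption of this example.

```python
import inspect
from typing import Any, Callable


def describe_arguments(func: Callable[..., Any]) -> list[dict[str, Any]]:
    """List each parameter of func with a flag saying whether it is required."""
    arguments = []
    for name, param in inspect.signature(func).parameters.items():
        arguments.append(
            {
                "name": name,
                "description": None,  # no per-argument description is available here
                "required": param.default is inspect.Parameter.empty,
            }
        )
    return arguments


def generateCode(language: str, task_description: str) -> str:
    """Stand-in for the prompt function, reduced to its signature and template."""
    return f"Write a {language} function that performs the following task: {task_description}"


if __name__ == "__main__":
    for argument in describe_arguments(generateCode):
        print(argument)
```

Giving a parameter a default value would flip its required flag to false in this sketch.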

Let's examine the schemas generated for our two example prompts:

suggestActivity prompt presented as JSON:

```json
{
  "key": "suggestActivity",
  "name": "suggestActivity",
  "description": "Returns a prompt to suggest activity",
  "arguments": [
    {
      "name": "type",
      "description": null,
      "required": true
    }
  ],
  "tags": [
    "fun",
    "activity"
  ],
  "enabled": true,
  "title": null,
  "icons": null,
  "meta": {
    "version": "1.1",
    "author": "papa smurf"
  }
}
```

generateCode prompt presented as JSON:

```json
{
  "key": "generateCode",
  "name": "generateCode",
  "description": "Returns a code snippet",
  "arguments": [
    {
      "name": "language",
      "description": null,
      "required": true
    },
    {
      "name": "task_description",
      "description": null,
      "required": true
    }
  ],
  "tags": [
    "code"
  ],
  "enabled": true,
  "title": null,
  "icons": null,
  "meta": {
    "version": "1.0",
    "author": "papa smurf"
  }
}
```

Conclusion

This concludes our comprehensive exploration of the Model Context Protocol (MCP). Throughout this series, we've covered the fundamental concepts of MCP servers, explored tools and resources, and now examined how prompts enhance the user experience by providing guided interactions with LLM systems.

You now have the knowledge to create your own MCP server with tools, resources, and prompts. However, it's important to remember that the MCP ecosystem is rapidly evolving. Not all MCP clients support every feature, and some may have limitations when listing certain tools or handling specific functionalities.

As this field continues to mature, we can expect improvements in client implementations and broader adoption across the industry. Even major technology companies are working to keep pace with the rapid advancement of AI tooling, making MCP an exciting space to watch and participate in.

Published by...